// Trusted by developers at

Searching for a README in a Node.js project? Claude Code can index thousands of node_modules files unnecessarily, burning through your token limits fast.

Sits between Claude Code and the Anthropic API

voltige intercepts API requests, analyzes them for wasteful context using lightweight fine-tuned LLMs, and optimizes them in milliseconds before forwarding to Anthropic, saving you tokens without quality loss.

Sign up, get your API key and configure Claude Code:

export ANTHROPIC_BASE_URL=https://proxy.voltige.ai
export ANTHROPIC_CUSTOM_HEADERS="voltige-authorization:YOUR_API_KEY"
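If you want to confirm the two variables are set correctly before launching Claude Code, a small check along these lines may help. This is only a sketch: the function name `check_voltige_env` is hypothetical and not part of any voltige tooling, and the expected host and header name are taken directly from the setup command above.

```python
import os
from urllib.parse import urlparse

EXPECTED_HOST = "proxy.voltige.ai"      # host from the setup command above
HEADER_NAME = "voltige-authorization"   # header name from the setup command above


def check_voltige_env(env=None):
    """Return a list of problems; an empty list means the setup looks right.

    Hypothetical helper for illustration, not an official voltige tool.
    """
    env = os.environ if env is None else env
    problems = []

    # The base URL should point at the proxy over HTTPS.
    base_url = env.get("ANTHROPIC_BASE_URL", "")
    parsed = urlparse(base_url)
    if parsed.scheme != "https" or parsed.hostname != EXPECTED_HOST:
        problems.append(
            f"ANTHROPIC_BASE_URL should be https://{EXPECTED_HOST}, got {base_url!r}"
        )

    # The custom header should look like "voltige-authorization:<key>".
    headers = env.get("ANTHROPIC_CUSTOM_HEADERS", "")
    name, sep, value = headers.partition(":")
    if name.strip().lower() != HEADER_NAME or not sep or not value.strip():
        problems.append(
            f"ANTHROPIC_CUSTOM_HEADERS should be '{HEADER_NAME}:<your key>'"
        )
    elif value.strip() == "YOUR_API_KEY":
        problems.append("ANTHROPIC_CUSTOM_HEADERS still contains the placeholder key")

    return problems
```

Calling `check_voltige_env()` with no arguments inspects the current shell environment; passing a dictionary makes it easy to test.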

Takes under 2 minutes to set up

API key never stored. voltige is an optimization layer only: your key passes through to Anthropic.

Lightweight LLMs optimize in milliseconds, so the time saved by sending fewer tokens outweighs the overhead.

Maintains prompt cache integrity, critical for agent performance.

Validated against SWE-bench, HumanEval, and MBPP. Reduces noise, improves focus.

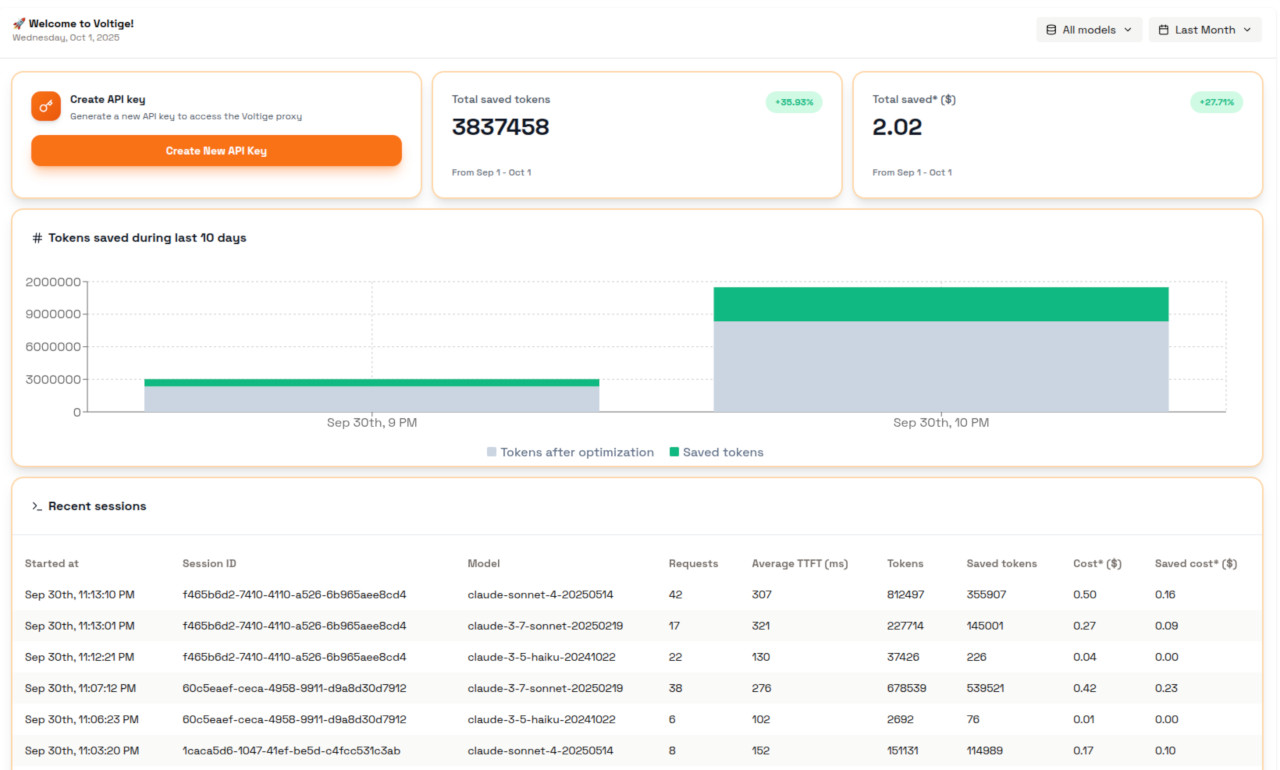

Real-time insights into API usage and optimization impact

Token usage, savings, optimization rates with per-session breakdowns

Exact Claude plan savings and ROI tracking over time

API patterns, model usage, and optimization impact analysis

Aligned with your Claude plan. Pay less, code 50% more.

2-week free trial

Prices shown don't include applicable tax.

No. Optimizations take only milliseconds, and the time saved from processing fewer tokens far exceeds the optimization overhead. Responses often feel faster because Claude has less context to parse.

Yes! All optimizations maintain prompt cache integrity, which is critical for coding agents. We never break cache boundaries or reorder content in ways that would invalidate caches.

No. Every optimization is tested against SWE-bench, HumanEval, MBPP, and custom benchmarks. Reducing irrelevant context often improves performance by helping Claude focus on what matters.

Cache reads still cost money, typically about 1/10 the price of regular input tokens. Since context is resent every turn (often dozens of turns per session), even cached costs compound quickly. Cache writes also cost more than regular inputs. By removing unused tokens, voltige reduces both cache read and write costs while improving agent performance through better-targeted context.
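To make the compounding concrete, here is a rough back-of-the-envelope sketch in Python. Only the 1/10 cache-read ratio comes from the text above. Everything else is an assumption for illustration: the base input price of $3 per million tokens, the 1.25x cache-write multiplier, the 100,000-token context, the 40 turns, and the 30% reduction are hypothetical figures, not measured voltige results. The model also ignores context growth and output tokens.

```python
def session_input_cost(context_tokens, turns, price_per_mtok=3.0,
                       cache_read_ratio=0.1, cache_write_ratio=1.25):
    """Estimate the input-side cost (USD) of resending a fixed context each turn.

    Simplified model: the context is written to the cache on the first turn
    and read back from the cache on every later turn. All default prices and
    ratios are illustrative assumptions, not quoted rates.
    """
    if turns < 1:
        raise ValueError("turns must be at least 1")
    # First turn: the whole context is a cache write (priced above regular input).
    write_cost = context_tokens * price_per_mtok * cache_write_ratio / 1_000_000
    # Remaining turns: the same context is a cache read (a fraction of input price).
    read_cost = (context_tokens * (turns - 1) * price_per_mtok
                 * cache_read_ratio / 1_000_000)
    return write_cost + read_cost


# Hypothetical session: 100k tokens of context resent over 40 turns,
# compared with the same session after trimming 30% of the context.
baseline = session_input_cost(100_000, 40)
trimmed = session_input_cost(70_000, 40)
print(f"baseline: ${baseline:.3f}  trimmed: ${trimmed:.3f}  "
      f"saved: ${baseline - trimmed:.3f}")
```

Under this model the cost scales linearly with context size, so any fraction of tokens removed cuts the cached input cost by the same fraction, on every turn.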

The /compact command is an expensive and slow process that compresses conversation history into a summary when you're running out of context space. voltige works proactively on every request, optimizing in milliseconds by removing wasteful tokens before they're sent, preventing the need for compaction altogether.

No. We forward your optimized requests to Anthropic with your API key, just like a local proxy. Your key is never stored. We're an optimization layer, not a reseller.

voltige is currently optimized for Claude Code and Anthropic. Support for other providers (Amazon Bedrock, Google Vertex AI, or Anthropic-compatible endpoints) is coming soon. Join our mailing list for updates.

Yes! voltige works with Claude Code using either a Claude subscription or an Anthropic API key. Track your savings in the Dashboard and choose a voltige plan that matches your usage tier.

If your voltige plan exceeds your Claude plan (e.g., Max vs Pro), everything works normally, but consider downgrading to save money. If your voltige plan is lower (e.g., Pro vs Max), we'll optimize up to your plan's limit, then forward unoptimized requests and suggest upgrading to maximize savings.

Join developers coding more, waiting less

Start Free Trial

2-week free trial · No card required